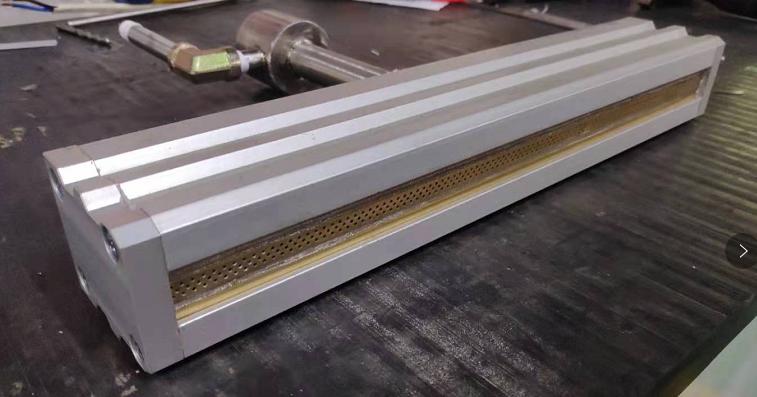

spark test machine

Understanding Spark Test Machines: Enhancing Performance and Efficiency in Data Processing

In the era of big data, efficient data processing has become a critical consideration for organizations seeking to gain insights and enhance productivity. Apache Spark, one of the most popular open-source distributed computing frameworks, provides scalable and fast data processing. To take full advantage of it, however, organizations need suitable infrastructure, including dedicated test environments, referred to in this article as Spark test machines.

What is a Spark Test Machine?

A Spark test machine is a dedicated machine or a cluster of machines optimized for running Apache Spark applications. These machines are configured to provide the necessary computational resources, such as CPU, memory, and storage, to handle large datasets efficiently. They allow developers and data engineers to test their Spark applications in an environment that closely resembles production, helping to identify performance bottlenecks and issues before deployment.

The Importance of Spark Test Machines

1. Performance Evaluation: One of the primary benefits of utilizing Spark test machines is the ability to conduct performance evaluations. Testing applications in a controlled environment allows developers to run benchmarks and identify how their code behaves under various conditions. This is critical for optimizing algorithms and ensuring efficient resource utilization.

2. Resource Allocation: Properly configured Spark test machines enable better resource allocation. Developers can experiment with different configurations, such as adjusting the number of executor cores, memory settings, and data partitioning strategies. Understanding the impact of these configurations on application performance is essential for maximizing efficiency.

3. Error Detection: Debugging Spark applications can be challenging, especially in a production environment. Testing in a dedicated environment allows for isolation of issues without affecting live systems. Developers can easily identify bugs, performance issues, or misconfigurations and rectify them before deploying to production.

4. Scalability Testing: Spark is known for its ability to scale horizontally across many nodes. Spark test machines can be configured to simulate varying numbers of nodes, enabling teams to understand how their applications will perform as data volumes grow. This helps in making informed decisions about scaling and infrastructure investments.

5. Cost Management: By utilizing Spark test machines, organizations can better manage costs associated with cloud services. Testing performance and efficiency before launching applications in production can prevent over-provisioning of resources, which can lead to unnecessary expenditures. With proper benchmarking, businesses can determine the optimal setup that balances cost and performance.
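The configuration experiments described under Resource Allocation can be scripted rather than run by hand. The following is a minimal sketch, not a definitive harness: it only builds `spark-submit` command lines for a small grid of settings and does not execute them. The application file `my_job.py`, the master URL `spark://test-master:7077`, and the specific values are hypothetical placeholders; the property names `spark.executor.cores`, `spark.executor.memory`, and `spark.sql.shuffle.partitions` are standard Spark configuration keys.

```python
from itertools import product

# Illustrative values only; choose ranges that match your own test cluster.
EXECUTOR_CORES = [2, 4]
EXECUTOR_MEMORY = ["4g", "8g"]
SHUFFLE_PARTITIONS = [100, 200]


def build_commands(app="my_job.py", master="spark://test-master:7077"):
    """Return one spark-submit argument list per configuration combination.

    `app` and `master` are hypothetical placeholders, not values from the article.
    """
    commands = []
    for cores, memory, partitions in product(
        EXECUTOR_CORES, EXECUTOR_MEMORY, SHUFFLE_PARTITIONS
    ):
        commands.append([
            "spark-submit",
            "--master", master,
            "--conf", f"spark.executor.cores={cores}",
            "--conf", f"spark.executor.memory={memory}",
            "--conf", f"spark.sql.shuffle.partitions={partitions}",
            app,
        ])
    return commands


if __name__ == "__main__":
    # Print the commands so they can be reviewed before anything is launched.
    for cmd in build_commands():
        print(" ".join(cmd))
```

Each argument list could then be passed to `subprocess.run` and timed, so that the same job is compared across configurations on the test machine before a setup is chosen for production.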


Best Practices for Setting Up Spark Test Machines

To maximize the efficacy of Spark test machines, several best practices should be followed:

1. Hardware Configuration: Choose machines with adequate CPU and memory resources to simulate production loads effectively. The configuration should reflect actual production environments to ensure realistic testing outcomes.

2. Cluster Management: Consider deploying a cluster manager, such as Kubernetes or Hadoop YARN, to streamline the management of resources across Spark test machines. (Apache Mesos is another option on older releases, but Spark has deprecated Mesos support since version 3.2.) This allows for dynamic resource allocation based on testing needs.

3. Data Simulations: Use representative datasets that mimic the structure, size, and distribution of data in production. This will yield more reliable performance metrics.

4. Monitoring and Logging: Implement robust monitoring and logging systems to gather insights during testing. Tools like Spark’s built-in web UI, Ganglia, or Prometheus can provide valuable performance metrics for analysis.

5. Regular Updates and Maintenance: Update software dependencies regularly and keep the test environment in step with any changes made to the production setup. This helps to keep tests relevant and effective.
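For the Data Simulations practice above, production data often cannot be copied into a test environment, so a synthetic dataset with a similar shape is one option. The sketch below is an illustration under stated assumptions, not a prescribed method: it generates rows whose keys follow a Zipf-like skew, since skewed keys are a common cause of uneven partitions. The column names `customer_id` and `amount`, the key count, and the skew exponent are all hypothetical and should be replaced with values measured from real data.

```python
import csv
import io
import random


def generate_rows(n_rows, n_keys=1000, skew=1.2, seed=42):
    """Yield (key, value) rows whose key frequencies follow a Zipf-like law.

    All parameter defaults are illustrative assumptions.
    """
    rng = random.Random(seed)  # fixed seed keeps test runs reproducible
    keys = [f"customer_{i}" for i in range(n_keys)]
    # Weight of the i-th key is proportional to 1 / (i + 1) ** skew,
    # so low-numbered keys appear far more often than high-numbered ones.
    weights = [1.0 / (i + 1) ** skew for i in range(n_keys)]
    for key in rng.choices(keys, weights=weights, k=n_rows):
        yield key, round(rng.uniform(1.0, 500.0), 2)


def write_csv(rows, handle):
    """Write rows to an open text handle as CSV with a header line."""
    writer = csv.writer(handle)
    writer.writerow(["customer_id", "amount"])
    writer.writerows(rows)


if __name__ == "__main__":
    # Small in-memory demonstration; pass a real file handle for larger volumes.
    buffer = io.StringIO()
    write_csv(generate_rows(10), buffer)
    print(buffer.getvalue())
```

Writing to a file handle instead of the in-memory buffer produces a CSV that a Spark job can read, and raising `skew` makes the hot keys more dominant, which is useful for checking how a job behaves when partitions are unbalanced.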

Conclusion

Spark test machines play a pivotal role in developing and deploying data processing applications on Apache Spark. By providing a controlled environment for performance testing, debugging, and resource optimization, they help organizations catch problems before production and improve the quality of their data solutions. Investing in the right infrastructure for Spark testing can lead to improved performance, reduced costs, and a more streamlined data processing workflow.


Copyright © 2025 Hebei Fangyuan Instrument & Equipment Co.,Ltd. All Rights Reserved. Sitemap | Privacy Policy
